exseveritate

side-projects severin bothered to put on the web

-!-

Box Banging

test project using an AR framework I've been building for a music project. The framework can integrate pose and facial tracking from various sensors into a single human pose model (though this project uses only Kinect2 pose tracking).

the view is a point cloud combining data from the Kinect depth camera, and a Unity game engine depth camera, rendering both real-world and game engine objects (the eyeballs) in a unified view with correct occlusion etc

the boxes are trigger volumes in the game engine space. They send MIDI notes to a DAW when entered by the correct skeletal joints or gestures, or MIDI continuous controller messages based on the position of the joints within the trigger volumes (e.g. the pitch bends as I move my hands through the boxes)
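a minimal sketch of that trigger logic in Python, not the actual Unity code. Everything named here is an assumption for illustration: the `TriggerBox` class, the axis-aligned box shape, the choice of the vertical axis for pitch bend, and the tuple message format. It shows a note-on when a joint enters a box, a 14-bit pitch bend value that follows the joint's position inside the box, and a note-off when the joint leaves.

```python
from dataclasses import dataclass


@dataclass
class TriggerBox:
    """Axis-aligned trigger volume (hypothetical stand-in for a game engine collider)."""
    min_corner: tuple  # (x, y, z)
    max_corner: tuple  # (x, y, z)
    note: int          # MIDI note number sent when a joint enters

    def contains(self, p):
        return all(lo <= v <= hi
                   for lo, v, hi in zip(self.min_corner, p, self.max_corner))

    def normalised(self, p, axis=1):
        """Position of p along one axis of the box: 0.0 at min, 1.0 at max."""
        lo, hi = self.min_corner[axis], self.max_corner[axis]
        return min(1.0, max(0.0, (p[axis] - lo) / (hi - lo)))


def pitch_bend_value(t):
    """Map t in [0, 1] to a 14-bit MIDI pitch bend value (0..16383, 8192 = centre)."""
    return min(16383, int(t * 16384))


def messages_for_joint(box, joint_pos, was_inside):
    """Return (messages, is_inside) for one tracked joint on one frame."""
    inside = box.contains(joint_pos)
    msgs = []
    if inside and not was_inside:
        msgs.append(("note_on", box.note, 100))
    if inside:
        msgs.append(("pitch_bend", pitch_bend_value(box.normalised(joint_pos))))
    if was_inside and not inside:
        msgs.append(("note_off", box.note, 0))
    return msgs, inside
```

calling `messages_for_joint` once per frame per joint, and passing the returned `is_inside` back in on the next frame, is enough to get enter/leave edges without any extra state.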

the point cloud uses a vertex shader to displace the points in defined areas for the shaking and vibration effects. The interface is like a synth, with ADSR envelopes and waveform selection for each effect, and the effects are triggered by MIDI messages from the gesture tracking system
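the synth-style control can be sketched on the CPU in Python. This is an illustration only, not the shader: the function names, the linear envelope segments, the default timings and the `depth` parameter are all assumptions. An ADSR envelope sets how strong the effect is over time, and a selectable waveform gives the shake itself.

```python
import math


def adsr(t, gate_time, attack=0.05, decay=0.1, sustain=0.6, release=0.3):
    """Envelope level in [0, 1] at t seconds after note-on.

    Note-off happens at gate_time. Segments are linear; attack, decay and
    release are assumed to be greater than zero.
    """
    def held(u):
        # Level while the note is still held.
        if u < attack:
            return u / attack
        if u < attack + decay:
            return 1.0 - (1.0 - sustain) * (u - attack) / decay
        return sustain

    if t < 0:
        return 0.0
    if t <= gate_time:
        return held(t)
    # Release: fade linearly from whatever level the note-off caught.
    fade = (t - gate_time) / release
    return max(0.0, held(gate_time) * (1.0 - fade))


WAVEFORMS = {
    "sine": lambda phase: math.sin(2 * math.pi * phase),
    "square": lambda phase: 1.0 if (phase % 1.0) < 0.5 else -1.0,
    "saw": lambda phase: 2.0 * (phase % 1.0) - 1.0,
}


def displacement(t, gate_time, waveform="sine", freq_hz=8.0, depth=0.05):
    """Offset (in scene units) to add to a point at time t."""
    return depth * adsr(t, gate_time) * WAVEFORMS[waveform](freq_hz * t)
```

in a shader the same value would be computed per vertex and applied only to points inside the affected area.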

box banging pic.twitter.com/yApnvbv9U9

— exseveritate (@exseveritate) November 4, 2024

Summon drumming in the point cloud 🔊 pic.twitter.com/yGA8bZPPez

— exseveritate (@exseveritate) November 3, 2024

-#-


i may have some neurological issues...

vtube character controlled by my movements, facial expressions and some foot controllers, modelled on the human nervous system. Built it after a discouraging visit with my neurologist. Not really visible in this video, but when any part of the body moves (fingers, arms, legs, facial features), blue signals travel from the brain down the nerves to that body part

my brain is broken

— exseveritate (@exseveritate) December 7, 2023

(🔊) pic.twitter.com/upUGPhFOqK

-#-

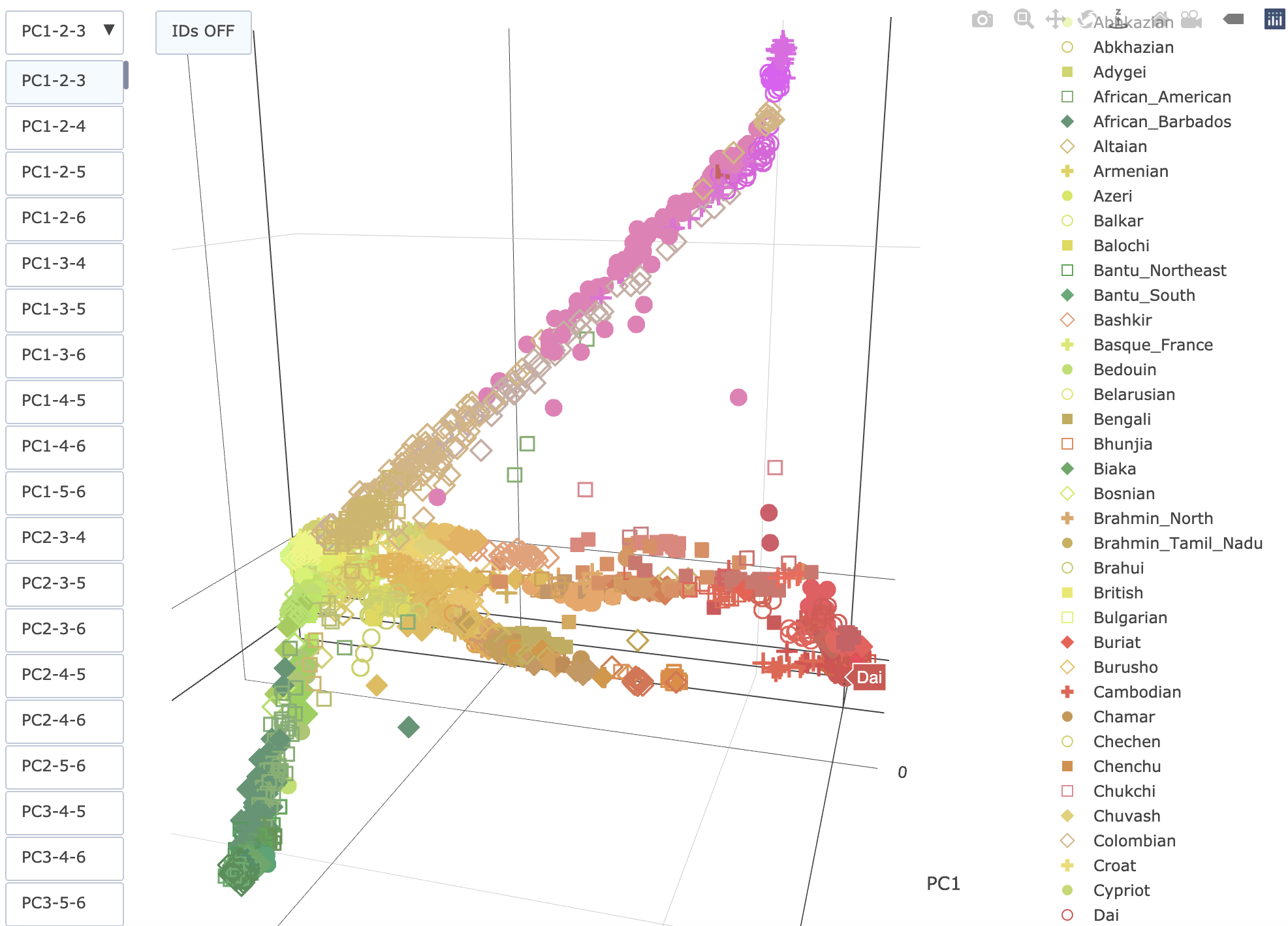

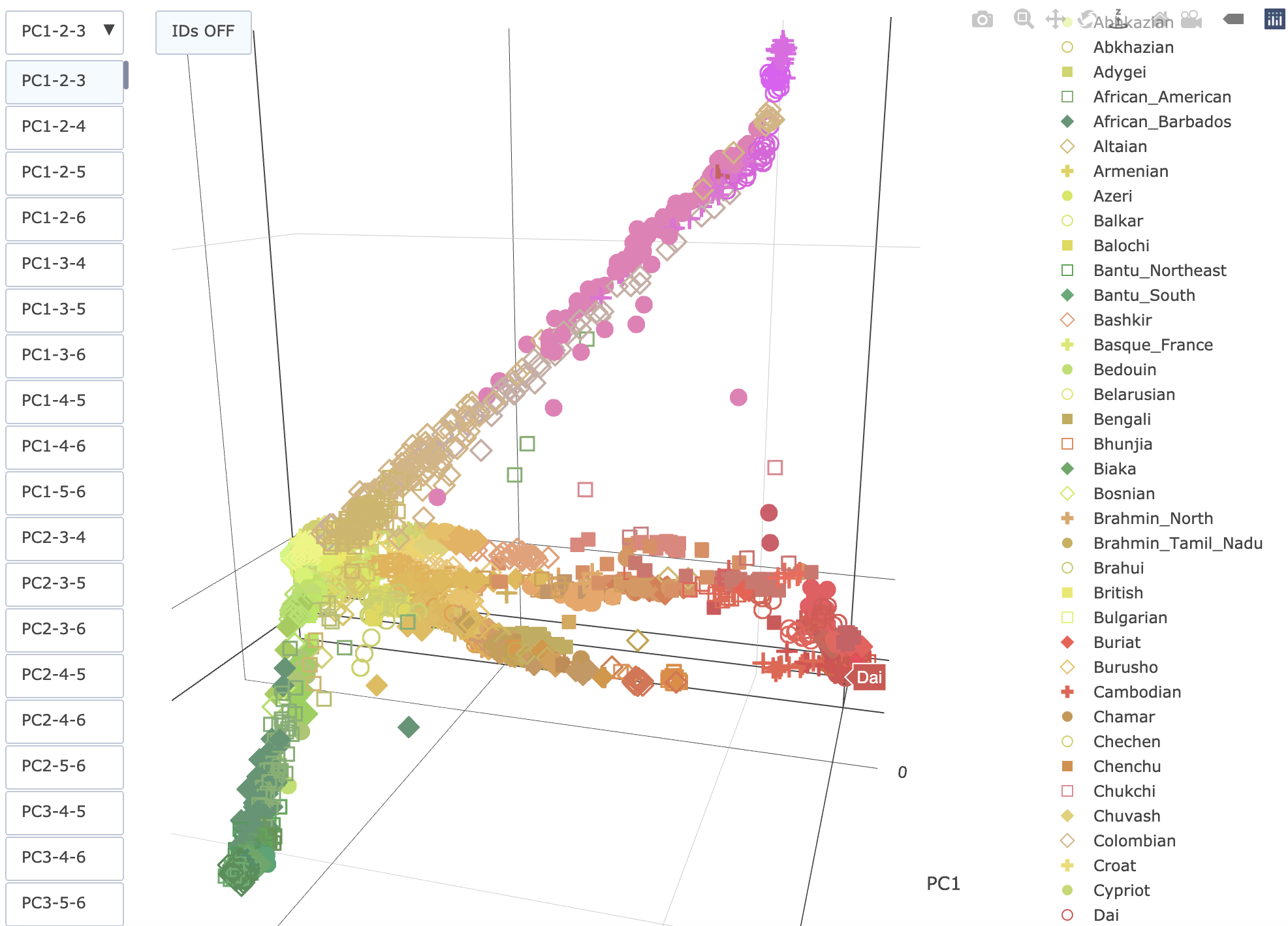

World Genomes 3D PCA Viewer

interactive 3D PCA viewer for a sample of 5k human genomes sourced from the 1000 Genomes Project, the Human Genome Diversity Project, and various University of Tartu papers.

graph display uses plotly. PCA computed with plink, data post-processing done in python with pandas. click and drag to rotate, scroll to zoom, shift+click+drag to pan. hover over points for sample info.

genomics papers try to give an overview of the genetic relationships between various human groups by plotting a series of 2D PCA graphs. This is an attempt to show the relationships in 3D, and to let you switch between various combinations of 3 Principal Components while keeping the same colour palette in all views, to help orient the views to each other. The colour palette is based on the average coordinates of each group on the first 3 PCs, with a random offset added to disambiguate similar groups. Code for processing the data to produce the palette is up on GitHub
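a rough sketch of the palette idea, written with the standard library only so it runs anywhere. This is not the code from the repository: the function name, the input format, the min-max rescaling to RGB and the `jitter` size are all assumptions. Each group's mean position on PC1 to PC3 becomes its red, green and blue values, plus a small random offset.

```python
import random


def group_palette(samples, jitter=0.05, seed=0):
    """samples: iterable of (group, pc1, pc2, pc3). Returns {group: '#rrggbb'}."""
    rng = random.Random(seed)  # seeded so the palette is reproducible

    # Accumulate per-group sums of the three PCs and a sample count.
    sums = {}
    for group, *pcs in samples:
        total = sums.setdefault(group, [0.0, 0.0, 0.0, 0])
        for i in range(3):
            total[i] += pcs[i]
        total[3] += 1
    means = {g: [s[i] / s[3] for i in range(3)] for g, s in sums.items()}

    # Rescale each PC's group means to [0, 1] so they can act as R, G, B.
    lo = [min(m[i] for m in means.values()) for i in range(3)]
    hi = [max(m[i] for m in means.values()) for i in range(3)]

    palette = {}
    for g, m in means.items():
        rgb = []
        for i in range(3):
            span = hi[i] - lo[i]
            unit = (m[i] - lo[i]) / span if span else 0.5
            unit += rng.uniform(-jitter, jitter)  # nudge similar groups apart
            rgb.append(round(255 * min(1.0, max(0.0, unit))))
        palette[g] = "#{:02x}{:02x}{:02x}".format(*rgb)
    return palette
```

because the colours come from the group means and not from whichever 3 PCs are on screen, a group keeps its colour when the view switches to a different combination of components.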

-#-

Paku Paku

A project by noise musician Lucas Abela, where users control hovercraft Pac-Man and ghosts in a room-sized maze

I made a system for this that tracked the hovercraft in the maze using a grid of infrared cameras on the roof and registration infrared LEDs on the hovercraft. The system then switched the lights on the floor of the maze on and off as the Pac-Man drove over them, like Pac-Man eating the pellets in the original game (this control system used a bunch of arduinos). Unfortunately all of the footage in the YouTube video is of the ghost hovercraft, which doesn't 'eat' the pellets. I also made a game view, based on a simulated model of the real-world maze, which was projected on the wall.
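the pellet logic can be sketched like this in Python. It is a simplified illustration and not the installed system: the `PelletGrid` class, the square cells, the 0.5 m cell size and the vehicle labels are all assumptions, and the camera tracking and arduino communication are left out. A tracked floor position is mapped to a grid cell, and the light in that cell is switched off the first time the Pac-Man passes over it.

```python
def cell_for_position(x_m, y_m, cell_size_m=0.5):
    """Maze grid cell (col, row) containing a tracked floor position in metres."""
    return int(x_m // cell_size_m), int(y_m // cell_size_m)


class PelletGrid:
    """Tracks which floor lights ('pellets') are still lit."""

    def __init__(self, cols, rows):
        self.lit = {(c, r) for c in range(cols) for r in range(rows)}

    def update(self, vehicle, x_m, y_m):
        """Return the cell whose light should be switched off, or None."""
        if vehicle != "pacman":
            return None  # ghosts don't eat pellets
        cell = cell_for_position(x_m, y_m)
        if cell in self.lit:
            self.lit.remove(cell)
            return cell
        return None
```

the returned cell is what would be sent on to whatever controller drives that light, and the same `lit` set could feed the projected game view.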